The benefits of mesh technologies

Here we discuss the benefits we’ve seen from implementing a service mesh at Grabyo, and our rationale for choosing AWS App Mesh over competitors’ offerings.

To briefly summarise, a service mesh is a dedicated infrastructure layer that allows a distributed system’s components to communicate with one another. Istio has an excellent paragraph on this topic, and we recommend reading it before continuing if you are unfamiliar with the concepts.

Background

When we started our journey into microservices development, we observed that several patterns that had traditionally worked well grew increasingly nonsensical as the number of services expanded. We also host our infrastructure entirely in AWS, so many of the problems and decisions described here are shaped by that.

Tech Stack

Our infrastructure consists of a monolith application hosted in EC2 and microservices hosted in ECS Fargate. We also have some services running on AWS Lambda.

Why Fargate?

At the time of our containerisation migration, our development team was just 10-15 engineers. EKS didn’t support Fargate, and we didn’t have the engineering resources to manage a Kubernetes cluster on EC2, so a managed orchestration platform made a lot of sense for us.

Requirements

Load Balancing

The first requirement was load balancing. Previously, having a single load balancer in front of our monolith was fine, but as we split out our services, we needed a load balancer per microservice. An idle AWS load balancer with no traffic costs in the region of $15-16/month, so adding one per service quickly increases our AWS load balancing costs.
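To make the scaling concrete, here is a back-of-the-envelope sketch using the $15-16/month idle cost quoted above. The 30-service estate and the three-AZ layout in the usage lines are hypothetical figures, not Grabyo’s actual numbers, and the estimate deliberately ignores any traffic-dependent charges.

```python
from typing import Tuple

# Range quoted above for one idle AWS load balancer, in USD per month.
IDLE_LB_MONTHLY_LOW = 15.0
IDLE_LB_MONTHLY_HIGH = 16.0


def idle_lb_cost_range(num_services: int, lbs_per_service: int = 1) -> Tuple[float, float]:
    """Return the (low, high) monthly cost of the load balancers before any traffic."""
    if num_services < 0 or lbs_per_service < 0:
        raise ValueError("counts must be non-negative")
    count = num_services * lbs_per_service
    return count * IDLE_LB_MONTHLY_LOW, count * IDLE_LB_MONTHLY_HIGH


if __name__ == "__main__":
    # Hypothetical estate of 30 microservices, one load balancer each.
    print(idle_lb_cost_range(30))     # (450.0, 480.0)
    # The same estate with one load balancer per AZ per service, across 3 AZs.
    print(idle_lb_cost_range(30, 3))  # (1350.0, 1440.0)
```

The cost grows linearly with the number of load balancers, so any layout that multiplies load balancers per service (for example, one per AZ) multiplies the baseline bill in the same proportion.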

Intelligent Routing

Several years ago, we had great success with our internally named “Keep It Within The Availability Zone” (KIWTAZ, pronounced “key-taz”) project, whose sole purpose was to ensure that traffic which could remain within an AZ did so. AWS data transfer costs affect us a lot, and by preventing unnecessary cross-AZ data transfer, we reduced our monthly data transfer costs by more than $10,000. Preserving that behaviour with traditional load balancers would have meant running a load balancer per AZ per microservice, which was not feasible given the costs above.
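The KIWTAZ rule boils down to “prefer a healthy endpoint in my own AZ”. The sketch below expresses that rule as a client-side filter. It is an illustration only, not Grabyo’s implementation: the `Endpoint` type, the addresses and the fallback to other AZs when no local endpoint is healthy are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical view of one service instance."""
    address: str
    availability_zone: str
    healthy: bool = True


def az_local_endpoints(endpoints: List[Endpoint], caller_az: str) -> List[Endpoint]:
    """Prefer healthy endpoints in the caller's AZ; otherwise fall back to any healthy one."""
    healthy = [e for e in endpoints if e.healthy]
    same_az = [e for e in healthy if e.availability_zone == caller_az]
    # Assumed fallback: cross-AZ traffic is better than no traffic at all.
    return same_az if same_az else healthy


if __name__ == "__main__":
    endpoints = [
        Endpoint("10.0.1.10", "eu-west-1a"),
        Endpoint("10.0.2.10", "eu-west-1b"),
        Endpoint("10.0.1.11", "eu-west-1a", healthy=False),
    ]
    print([e.address for e in az_local_endpoints(endpoints, "eu-west-1a")])  # ['10.0.1.10']
    print([e.address for e in az_local_endpoints(endpoints, "eu-west-1c")])  # ['10.0.1.10', '10.0.2.10']
```

A service mesh can apply this kind of filtering in its data plane, which avoids needing a separate load balancer in each AZ.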

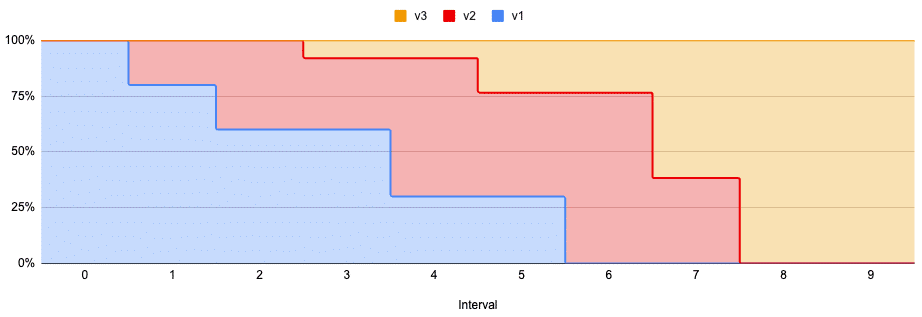

Another benefit of intelligent routing and traffic shaping is enabling canary releases. Canary releases are an increasingly common microservice deployment strategy in which a new version receives a share of traffic while it is monitored for application errors, and is rolled back if an error threshold is breached. With traditional load balancing mechanisms, particularly in AWS, traffic shaping is complex and manual, and services such as AWS CodeDeploy are limited to two concurrent deployments. What happens if we want to shape traffic between three or more simultaneous deployments? With microservices and a fast feedback and release cycle, this is an entirely plausible scenario.
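Conceptually, shaping traffic across several concurrent deployments is weighted selection: each version owns a slice of the total weight proportional to its share. The following minimal sketch shows that idea for a stable version plus two canaries. The version names and the 80/10/10 weights are hypothetical, and this is not how any particular mesh implements it internally.

```python
import bisect
import itertools
import random
from collections import Counter
from typing import Dict


def version_at(weights: Dict[str, int], point: int) -> str:
    """Map a point in [0, total weight) to the version that owns that slice."""
    if not weights or any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-empty and non-negative")
    names = list(weights)
    cumulative = list(itertools.accumulate(weights[name] for name in names))
    if not 0 <= point < cumulative[-1]:
        raise ValueError("point must lie in [0, total weight)")
    # bisect_right skips zero-weight versions, whose slices are empty.
    return names[bisect.bisect_right(cumulative, point)]


def pick_version(weights: Dict[str, int], rng: random.Random) -> str:
    """Pick a version with probability proportional to its weight."""
    return version_at(weights, rng.randrange(sum(weights.values())))


if __name__ == "__main__":
    weights = {"stable": 80, "canary-a": 10, "canary-b": 10}
    # Walking every point once shows each version's exact share of the range.
    counts = Counter(version_at(weights, p) for p in range(100))
    print(dict(counts))  # {'stable': 80, 'canary-a': 10, 'canary-b': 10}
    print(pick_version(weights, random.Random(42)))
```

Because the number of versions is just the size of the dictionary, nothing limits this approach to two deployments; rolling a canary forward or back is a matter of changing its weight.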

Security

Our final requirement was security. A growing concern is lateral movement attacks, in which a compromised service probes every endpoint available to it for exposed data. Our “walled garden” approach to security was no longer appropriate.
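A common defence against lateral movement, and the one App Mesh offers, is mutual TLS (mTLS): a service only accepts connections from clients presenting a certificate issued by a trusted CA. In a mesh the sidecar proxy does this on the service’s behalf; the Python sketch below only illustrates the server-side rule using the standard `ssl` module. The certificate file paths are hypothetical parameters.

```python
import ssl
from typing import Optional


def mtls_server_context(ca_file: Optional[str] = None,
                        cert_file: Optional[str] = None,
                        key_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that rejects clients without a trusted certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Require a client certificate: this is the "mutual" part of mTLS.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # Trust only client certificates issued by this (hypothetical) private CA.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file and key_file:
        # The server's own certificate, presented to connecting clients.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

With this rule in place, a compromised service that lacks a valid certificate cannot complete a handshake with its neighbours, which limits what it can probe.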

Why a Service Mesh?

A service mesh piqued our interest because meshes typically provide, at layer 7, many features found in traditional infrastructure architectures. One example is load balancing without dedicated load-balancing resources. Attribute-based DNS lookup is also a common feature of many service mesh technologies, which solves our KIWTAZ requirement. Most major service mesh providers also support mTLS. Finally, advanced routing and traffic distribution are increasingly common features.

But it wasn’t the features alone that drove our decision to adopt a service mesh. It was that all of them are managed and governed in a single tool, which reduces management overhead and spares engineers from learning many different technologies to achieve these goals.

We have a robust DevOps methodology at Grabyo, whereby service owners take responsibility for their application, infrastructure, security, and CI/CD pipelines. To support this, we run a myriad of training sessions, and training service owners on one tool (the service mesh) is preferable to training them on many different ones to achieve the same thing.

Options

We considered three options, two of them seriously.

Istio

We don’t use Kubernetes. This immediately removes Istio from contention.

HashiCorp Consul

Consul offers some pretty incredible features, such as Consul Connect, which allows a single mesh across multiple regions, cloud architectures, and even localhost.

Consul also supports DNS tagging, so we could, for example, use eu-west-1a.udvr.service.grabyo as a DNS record to look up a healthy node of the UDVR service in the eu-west-1a AZ. Omitting the tag from the lookup splits traffic across both v1 and v2 instances, but weighting cannot be set directly from the control plane (e.g. we could not specify v1:3 and v2:1 for a 75:25 split). That would require a service load balancer in front of the service, one for each AZ.
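Consul’s DNS interface names services as `[tag.]<service>.service[.<datacenter>].<domain>`, with `consul` as the default domain; the example above uses a custom `grabyo` domain. The sketch below builds such names and, under the assumption that clients spread requests evenly over every healthy instance a lookup returns, estimates the resulting traffic split. The helper names are hypothetical.

```python
from typing import Dict, Optional


def consul_dns_name(service: str, domain: str = "consul",
                    tag: Optional[str] = None,
                    datacenter: Optional[str] = None) -> str:
    """Build a name of the form [tag.]<service>.service[.<datacenter>].<domain>."""
    labels = [tag] if tag else []
    labels += [service, "service"]
    if datacenter:
        labels.append(datacenter)
    labels.append(domain)
    return ".".join(labels)


def instance_count_split(instances: Dict[str, int]) -> Dict[str, float]:
    """Approximate percentage of traffic per version when the only lever is how
    many healthy instances each version has (no control-plane weights)."""
    total = sum(instances.values())
    if total <= 0:
        raise ValueError("need at least one healthy instance")
    return {version: 100 * count / total for version, count in instances.items()}


if __name__ == "__main__":
    print(consul_dns_name("udvr", domain="grabyo", tag="eu-west-1a"))
    # eu-west-1a.udvr.service.grabyo
    print(instance_count_split({"v1": 3, "v2": 1}))
    # {'v1': 75.0, 'v2': 25.0}
```

Under that assumption, a 75:25 split is only reachable by running three v1 instances for every v2 instance, which ties the rollout percentage to scaling decisions rather than to a routing policy.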

Consul supports mTLS nicely through its integration with HashiCorp Vault. As open-source Vault users ourselves, we saw potential for an excellent integration here for certificate distribution and revocation.

AWS App Mesh

App Mesh is a more recent addition to the ever-growing list of AWS services.

App Mesh is a managed control plane that lets users govern routing policies, and gain visibility into how services communicate, without redeploying application code.

It integrates natively with AWS services such as Cloud Map, Route 53, ECS, X-Ray and CloudWatch to offer a robust suite of features, including all of those in our requirements. There is also the promise of Lambda support for the mesh, but we’ll need to be patient on that one.

App Mesh supports attribute-based lookup via Cloud Map and offers advanced traffic shaping and routing through weighted routes, which fits our canary requirements perfectly. Integrations with X-Ray and CloudWatch allow us to monitor the components of a distributed platform at a granular level and watch the results of traffic-shaping changes live. Finally, App Mesh supports mTLS via Private ACM to provide service-to-service authentication and prevent lateral movement attacks.
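As a rough illustration of the two features described above, the sketch below builds the request shapes for an App Mesh weighted HTTP route and for Cloud Map service discovery filtered by AZ. It only constructs dictionaries and makes no AWS calls. The virtual node, namespace and service names are hypothetical, and the `AVAILABILITY_ZONE` attribute reflects our understanding of the attributes ECS adds when it registers tasks in Cloud Map; treat it as an assumption to verify.

```python
import json
from typing import Dict


def weighted_route_spec(weights: Dict[str, int], prefix: str = "/") -> dict:
    """Build an App Mesh HTTP route spec that splits traffic across virtual nodes."""
    if not weights:
        raise ValueError("at least one target is required")
    if any(not 0 <= w <= 100 for w in weights.values()):
        raise ValueError("App Mesh target weights must be between 0 and 100")
    return {
        "httpRoute": {
            "match": {"prefix": prefix},
            "action": {
                "weightedTargets": [
                    {"virtualNode": node, "weight": weight}
                    for node, weight in weights.items()
                ]
            },
        }
    }


def az_cloud_map_discovery(namespace: str, service: str, az: str) -> dict:
    """Virtual node service discovery restricted to Cloud Map instances in one AZ.

    Assumes instances carry an AVAILABILITY_ZONE attribute (set by ECS on registration).
    """
    return {
        "awsCloudMap": {
            "namespaceName": namespace,
            "serviceName": service,
            "attributes": [{"key": "AVAILABILITY_ZONE", "value": az}],
        }
    }


if __name__ == "__main__":
    route = weighted_route_spec({"udvr-v1": 75, "udvr-v2": 25})
    print(json.dumps(route, indent=2))
    # The dict could be passed as the `spec` argument of boto3's appmesh client,
    # e.g. create_route(meshName=..., virtualRouterName=..., routeName=..., spec=route).
    # Not executed here.
```

Unlike the DNS-only approach, the 75:25 split here is an explicit policy on the route, so it can be changed without touching instance counts or application code.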

Outcome

Consul offers a vast and growing set of highly desirable features on an ever-changing platform, but our relationship with AWS and its pay-per-usage approach better suited our needs. Given our team size, we also strive to use managed services where possible. Unfortunately, at the time of writing, HashiCorp does not offer a managed Consul platform for AWS, although one seems likely to arrive if it is not already confirmed.

Additionally, we would be responsible for the Vault cluster distributing certificates to all our nodes across the platform. While that is not prohibitive, relying on Private ACM is preferable for ease of management, scalability and availability.

Why AWS App Mesh

As an AWS Partner that already relies heavily on AWS products, we are well placed to adopt new ones. We work closely with Amazon and have a healthy relationship with them, built on a years-long partnership that has grown stronger with time. Being able to reach out to the App Mesh service team for support and advice is hugely valuable compared with running open-source Consul clusters and acting as our own support.

Closing comments

While we are happy with our implementation choice and rationale, AWS App Mesh is not yet the 10/10 product we would like it to be. App Mesh’s mTLS support for ECS Fargate is convoluted, and not the first-class integration we would like to see from the team at Amazon. Still, we are confident that improvements are coming, and as AWS customers we trust the team will listen to feedback.

We’re hiring!

We’re looking for talented engineers in all areas to join our team and help us to build the future of broadcast and media production.