Grabyo join W3C 2022

Last year we were pleased to be invited to contribute to the W3C/SMPTE Joint Workshop on Professional Media Production on the Web.

The W3C is the main international consortium that develops standards and recommendations for the web platform. SMPTE is a standards organisation focused on international technical standards for motion-imaging content.

This workshop aimed to gather recommendations to improve support for professional media workflows on the platform. Engineers from many companies such as Google, Microsoft and ByteDance presented their use cases and suggestions.

Why do professional media production on the web?

Video production work is very intensive on computing resources. This work is typically done on high-end workstations, dedicated media processing devices or even dedicated server farms.

The most performant approaches are usually low-level, where system-level overhead can be minimised, and developers have fine-grained access to memory, CPU and GPU to optimise performance. This is in contrast to the web platform, where everything runs inside a web browser, which exposes very little control of the underlying system to web pages.

However, the web has one huge advantage: portability. A web application will (in theory) work on any device capable of running a web browser, in any location. The idea that a business could replace expensive, bespoke, depreciating hardware with any device that can run a web browser, anywhere with an internet connection, is clearly appealing from a flexibility perspective. There’s no hardware procurement, installation, maintenance or transportation: users log in to the website and start creating from anywhere.

Encoding a high-quality video in a web browser on your laptop will take a long time, so we still want to offload the heavy work to the cloud, where we can provide more powerful and more specialised encoders on-demand. In addition, the web browser can be used to provide an interface for creators to preview their video and make their creative decisions.

Next-gen web media capabilities

Media capabilities on the web took a hit with the death of Flash, but web-native capabilities have been improving significantly since the introduction of HTML5. Some of the most significant early additions include HTML5 Media Elements, Canvas, Web Audio and Media Streams.

Using these tools, Grabyo was able to create powerful and disruptive media production tools for the web platform, but there were still limitations:

- A web page could only make use of a single CPU thread.

- A web page had no low-level memory management features.

- A web page could not access the GPU or leverage video rendering-acceleration technologies like NVIDIA’s CUDA.

- A web page could only run JavaScript code, which is inherently less performant than compiled languages.

- These APIs do not expose the entire video-rendering pipeline, preventing developers from optimising that part of the process for their use cases.

In answer to this, more advanced capabilities were introduced to the Web Platform:

- Web Workers allow a web page to use an additional CPU thread to do work without blocking the page’s main thread.

- WebAssembly allows lower-level compiled languages such as C++ or Rust to be used in the browser for improved performance over JavaScript.

- WebGL and WebGPU enable utilising the device’s GPU to provide powerful rendering capabilities.

- WebCodecs provides lower-level access to the browser’s video encoding and decoding pipelines.
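Because support for these capabilities varies between browsers, an application usually has to check what is available before choosing a code path. The following is a minimal sketch of such a check, not a definitive implementation. The helper name `detectMediaFeatures` and the `MediaFeatureReport` shape are hypothetical; the globals it looks for (`Worker`, `WebAssembly`, `WebGLRenderingContext`, `navigator.gpu`, `VideoEncoder`, `VideoDecoder`) are the standard entry points for each feature.

```typescript
// Hypothetical report shape: one flag per capability from the list above.
interface MediaFeatureReport {
  webWorkers: boolean;
  webAssembly: boolean;
  webGL: boolean;
  webGPU: boolean;
  webCodecs: boolean;
}

// `scope` is the global object of the environment being inspected
// (a page's window, a worker's self, ...). Passing it in keeps the
// function free of assumptions about where it runs.
function detectMediaFeatures(scope: Record<string, unknown>): MediaFeatureReport {
  const nav = scope["navigator"];
  // WebGPU is exposed as `navigator.gpu`.
  const hasGpu = typeof nav === "object" && nav !== null && "gpu" in nav;
  const wasm = scope["WebAssembly"];
  return {
    webWorkers: typeof scope["Worker"] === "function",
    webAssembly: typeof wasm === "object" && wasm !== null,
    webGL: typeof scope["WebGLRenderingContext"] === "function",
    webGPU: hasGpu,
    // WebCodecs exposes separate encoder and decoder constructors.
    webCodecs:
      typeof scope["VideoEncoder"] === "function" &&
      typeof scope["VideoDecoder"] === "function",
  };
}

// In a page this could be called as:
//   detectMediaFeatures(globalThis as unknown as Record<string, unknown>)
```

A report like this only says that an API is present. Whether a particular codec or GPU feature is usable still has to be probed through the API itself.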

With these newer tools, developers have been able to push media software on the web to new heights, but more can be done to enable more powerful workflows on the platform.

Workshop

We presented to the workshop on two topics:

Media Element accuracy and synchronization with the DOM by Sacha Guddoy (Grabyo)

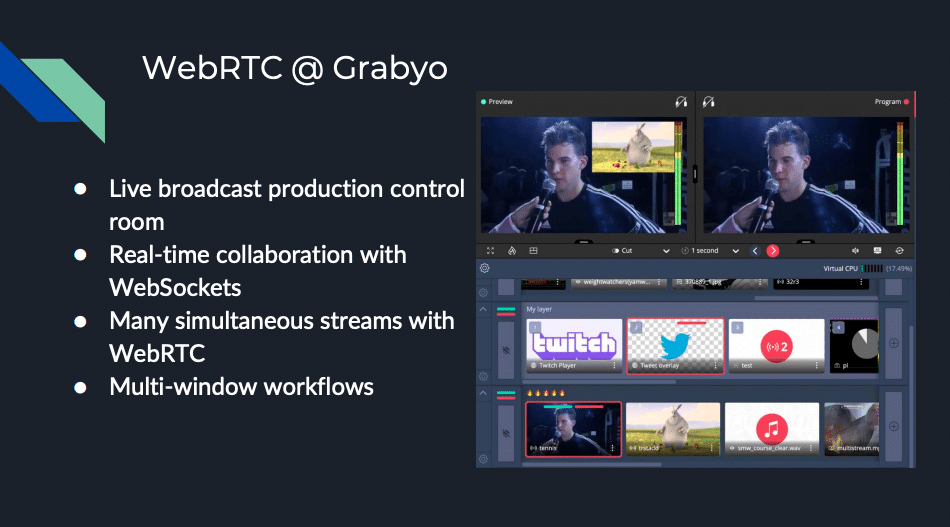

WebRTC in live media production by Sacha Guddoy (Grabyo)

Media Element accuracy

There has been an exciting discussion on this topic on the W3C’s GitHub: “Frame accurate seeking of HTML5 MediaElement” (Issue #4, w3c/media-and-entertainment).

HTMLVideoElement.requestVideoFrameCallback() is a proposed interface that would go a long way towards alleviating some of these issues by exposing more detailed metadata in time with each frame of the video. It should also help performance, because the callback function runs at the frame rate of the video rather than the refresh rate of the browser/display. At the time of writing, however, this feature is only supported in Chromium-based browsers.
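To illustrate how the per-frame metadata can be used, here is a minimal sketch that turns each callback into a frame index and a count of frames missed since the previous callback. It relies on two fields of the callback metadata, `mediaTime` and `presentedFrames`. Everything else is an assumption made for the example: the helper names `frameIndexAt` and `watchFrames`, the local `FrameInfoLike` and `FrameCallbackVideoLike` interfaces (used so the sketch does not depend on DOM typings), and above all the constant, known frame rate, which the API itself does not report.

```typescript
// Minimal local shapes for the parts of the API the sketch uses.
interface FrameInfoLike {
  mediaTime: number; // presentation timestamp of the frame, in seconds
  presentedFrames: number; // running count of frames submitted for composition
}
interface FrameCallbackVideoLike {
  requestVideoFrameCallback(cb: (now: number, info: FrameInfoLike) => void): number;
}

// Assumes a constant frame rate, so frame n is presented at n / fps seconds.
// Rounding absorbs small floating-point errors in the timestamp.
function frameIndexAt(mediaTime: number, fps: number): number {
  return Math.round(mediaTime * fps);
}

// Calls onFrame once per presented frame with the frame index and the
// number of frames that were presented without a callback in between.
function watchFrames(
  video: FrameCallbackVideoLike,
  fps: number,
  onFrame: (frameIndex: number, missed: number) => void
): void {
  let lastPresented: number | null = null;
  const step = (_now: number, info: FrameInfoLike): void => {
    const missed =
      lastPresented === null ? 0 : Math.max(0, info.presentedFrames - lastPresented - 1);
    lastPresented = info.presentedFrames;
    onFrame(frameIndexAt(info.mediaTime, fps), missed);
    // The callback fires once per registration, so register again.
    video.requestVideoFrameCallback(step);
  };
  video.requestVideoFrameCallback(step);
}
```

In a supporting browser, an `HTMLVideoElement` could be passed as `video`. A non-zero `missed` value is a hint that the page is not keeping up with the video.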

DOM Synchronisation

In many cases, we want to synchronise the behaviour of other elements of the web page with the media playback, e.g. a video timestamp or progress bar.

DOM updates and video updates are running in separate threads, so by the time your update is represented in the DOM, the video has already moved on and may be displaying the next frame. Existing implementations will likely be good enough for most applications, but those who care about true frame accuracy will face challenges here.
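As a rough illustration of the timestamp use case, the sketch below drives a timecode label from the video’s per-frame callback instead of a timer. It is an assumption-laden example rather than a fix: `toTimecode` and `bindTimecodeLabel` are hypothetical helpers, the local interfaces stand in for the real DOM types, the frame rate is assumed to be a known integer, and the label may still reach the screen a display refresh later than the frame it describes.

```typescript
// Minimal local shapes so the sketch does not depend on DOM typings.
interface TimedFrameInfo {
  mediaTime: number; // presentation timestamp of the frame, in seconds
}
interface TimecodeVideoLike {
  requestVideoFrameCallback(cb: (now: number, info: TimedFrameInfo) => void): number;
}
interface TextTargetLike {
  textContent: string | null;
}

// Formats a media time as HH:MM:SS:FF. Assumes an integer frame rate;
// fractional rates such as 29.97 need drop-frame handling, not shown here.
function toTimecode(mediaTime: number, fps: number): string {
  const two = (n: number): string => (n < 10 ? "0" + n : String(n));
  const totalFrames = Math.round(mediaTime * fps);
  const frames = totalFrames % fps;
  const totalSeconds = Math.floor(totalFrames / fps);
  const seconds = totalSeconds % 60;
  const minutes = Math.floor(totalSeconds / 60) % 60;
  const hours = Math.floor(totalSeconds / 3600);
  return two(hours) + ":" + two(minutes) + ":" + two(seconds) + ":" + two(frames);
}

// Rewrites the label every time the video presents a new frame.
function bindTimecodeLabel(video: TimecodeVideoLike, label: TextTargetLike, fps: number): void {
  const update = (_now: number, info: TimedFrameInfo): void => {
    label.textContent = toTimecode(info.mediaTime, fps);
    video.requestVideoFrameCallback(update); // one-shot callback, so re-register
  };
  video.requestVideoFrameCallback(update);
}
```

Tying the DOM write to the frame callback narrows the gap between what the video shows and what the label says, but it does not make the two updates atomic.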

The proposed Timing Object specification defines a TimingObject interface intended for synchronising things like Media Element playback, DOM updates and CSS animations. After several years, however, it has not gained much traction.

WebRTC

Stream Synchronisation

One of our use cases for WebRTC is a live production tool where a video producer can switch between and mix multiple live video streams. For the best user experience, our video feeds need to be in sync as much as possible.

When live video data is transmitted over the internet, information can be lost or playback delayed because of network issues like packet loss and latency. In this scenario, videos transmitted on different connections can drift out of sync, so we need to re-sync them where possible and alert the user when there are sync issues.
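The re-sync and alert decision can be sketched as a small calculation. This is an illustration under stated assumptions, not a description of our production logic: it assumes each feed can report the capture time of the frame it is currently showing against a shared clock, and the names `FeedPosition`, `ResyncPlan` and `planResync` are hypothetical.

```typescript
// Hypothetical input: the capture time (in ms, on a shared clock) of the
// frame each feed is currently displaying.
interface FeedPosition {
  id: string;
  captureTimeMs: number;
}

interface ResyncPlan {
  spreadMs: number; // gap between the most and least delayed feeds
  inSync: boolean; // false means the user should be alerted
  delayByFeed: Record<string, number>; // extra delay needed to align each feed
}

// Aligns every feed to the most delayed one, since a feed can be held
// back but cannot show frames that have not arrived yet.
function planResync(feeds: FeedPosition[], toleranceMs: number): ResyncPlan {
  const delayByFeed: Record<string, number> = {};
  if (feeds.length === 0) {
    return { spreadMs: 0, inSync: true, delayByFeed };
  }
  const times = feeds.map((feed) => feed.captureTimeMs);
  const oldest = Math.min(...times);
  const newest = Math.max(...times);
  for (const feed of feeds) {
    delayByFeed[feed.id] = feed.captureTimeMs - oldest;
  }
  const spreadMs = newest - oldest;
  return { spreadMs, inSync: spreadMs <= toleranceMs, delayByFeed };
}
```

For example, with feeds at 10000 ms, 10120 ms and 10040 ms and a tolerance of 80 ms, the spread is 120 ms, so the plan flags the feeds as out of sync and suggests holding the second feed back by 120 ms and the third by 40 ms.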

WebRTC Insertable Streams

Insertable Streams is a new API that enables hooking into the decoding and encoding pipelines of WebRTC. This allows the developer to insert transformation or analysis steps into the video decoding/encoding, which was previously impossible. For example, this could transform video in real-time in the browser for effects such as Snapchat-like filters or blurred backgrounds like Google Meet.

This feature has launched in Chromium but sadly has not been picked up anywhere else yet.
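To give a feel for what an inserted step looks like, here is a minimal sketch of an analysis step that measures encoded frame sizes and passes every frame through unchanged. It is written as a transformer object with a `transform(chunk, controller)` method, which is the shape the Streams API’s `TransformStream` constructor accepts. The names `createFrameSizeProbe`, `FrameSizeStats`, `EncodedFrameLike` and `FrameSink` are hypothetical, and the local interfaces only model the fields used here (`data` and `timestamp` on an encoded frame, `enqueue` on the controller).

```typescript
// Minimal local shapes so the sketch does not depend on DOM typings.
interface EncodedFrameLike {
  data: ArrayBuffer; // encoded payload of the frame
  timestamp: number;
}
interface FrameSink<T> {
  enqueue(chunk: T): void;
}
interface FrameSizeStats {
  frames: number;
  bytes: number;
}

// Builds a transformer that tallies frame sizes into `stats`.
function createFrameSizeProbe(stats: FrameSizeStats) {
  return {
    transform(frame: EncodedFrameLike, controller: FrameSink<EncodedFrameLike>): void {
      stats.frames += 1;
      stats.bytes += frame.data.byteLength;
      // Forward the frame untouched; a filter or effect would modify it here.
      controller.enqueue(frame);
    },
  };
}

// In a supporting browser, the transformer could be wrapped and piped between
// the readable and writable ends that the API exposes, roughly:
//   readable.pipeThrough(new TransformStream(createFrameSizeProbe(stats)))
//           .pipeTo(writable);
```

The same structure applies to transformation steps: the only difference is that the frame is altered before it is enqueued.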

Outcomes

The W3C report can be read here: W3C/SMPTE Joint Workshop on Professional Media Production on the Web

There were already proposals underway in the W3C’s various groups and committees on many of the topics covered, but sharing use cases helps guide the development of specifications and highlights the demand for certain features.

In other cases, there was simply a lack of alignment between browser vendors. Chromium leads the pack on most media features and even forges ahead with non-standard proposals, often in origin trials. Firefox expends a lot of effort in this area but still trails behind in many cases. Safari remains the “North Korea of browsers” in the media landscape, often lagging far behind the others in implementation and remaining largely opaque about its future plans.

The workshop proposes forming a task force within the W3C to focus on professional media use cases that match the workshop’s scope.

Other topics raised

- WebCodecs – Exposing demuxing process, quality controls, improving native codec support.

- WebAudio – API improvements, latency challenges and synthesised speech.

- WebRTC – Signalling protocols, production-quality codecs, real-time captioning.

- WebAssembly – 64-bit support for heap management, SIMD support, reducing memory copies.

- File System Integration – Origin Private File System and File System Access for working with very large files.

- Metadata – Preserving metadata from media’s source to the end product.

- Accessibility – Highlighting the need for considering accessibility requirements in media authoring tools.

Summary

This was a rare opportunity to have expert media engineers from all levels of the industry come together and share the limitations they are hitting in the web platform. The participants were all working on the cutting edge of web technology. It was exciting and inspiring to see all the cool things people were doing with the different technologies and the varied ways they pushed and took advantage of the web.

I learned a lot from watching these talks and the conversations and from my research in preparation for my presentations. Thank you to the W3C for inviting us at Grabyo to be a part of the workshop, and I look forward to continuing to work together in the future.

We’re hiring!

We’re looking for talented engineers in all areas to join our team and help us to build the future of broadcast and media production.